How to Manually Decode Event Logs

A step-by-step guide to the what, why, and how of manually decoding event logs.

Event logs are records generated when a smart contract emits an event. These logs provide additional details about state changes—modifications to a contract's data storage—resulting from executed transactions. For instance, a token transfer, which alters sender and receiver balances, qualifies as a state change recorded in the event logs.

Each event log record consists of both topics and data. While topics are indexed parameters of an event, data contains the non-indexed parameters.

Understanding Topics and Data

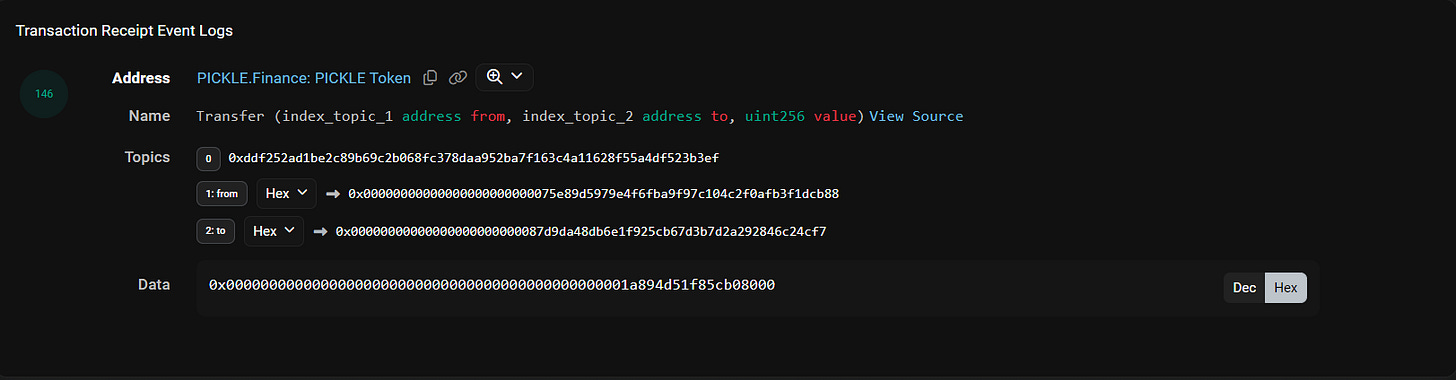

Consider a basic transfer event log:

Transfer(address indexed from, address indexed to, uint256 value)

The first part of an event log is an array of topics, each a 32-byte (256-bit) word that describes what happened in the event. Topics hold the event signature and the indexed parameters, meaning they can be searched for specific values. A log can carry up to four topics: topic0, topic1, topic2, and topic3.

The first topic is the event signature: the Keccak-256 hash of the event's name and its parameter types. There can be up to three additional topics, one for each indexed parameter.

For the Transfer event mentioned above, the topics would be:

Topic 0: The event signature

Topic 1: The from address

Topic 2: The to address

Data is the second part of event logs, containing non-indexed parameters that cannot be directly searched. Unlike topics, which are limited to 32 bytes, data can hold any data type, including complex structures like tuples and strings, providing more detailed information about the event.

In the Transfer event, the value would be included in the data section.
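
As a rough illustration of how the event declaration determines this split, here is a small Python sketch (an offline aid, not Dune SQL and not part of the original walkthrough). The function name `layout_event_parameters` is hypothetical, and the parser assumes a flat, non-anonymous event with no tuple parameters.

```python
def layout_event_parameters(declaration: str) -> dict:
    """Map each parameter of a flat event declaration to a topic slot or to data."""
    # Take the text between the outermost parentheses.
    params = declaration[declaration.index("(") + 1 : declaration.rindex(")")]

    layout = {"topic0": "event signature hash"}
    data_fields = []
    topic_index = 1

    for param in params.split(","):
        tokens = param.split()
        if not tokens:
            continue  # event with no parameters
        param_type, param_name = tokens[0], tokens[-1]
        if "indexed" in tokens[1:-1]:
            # Indexed parameters fill topic1, topic2, topic3 in declaration order.
            layout[f"topic{topic_index}"] = f"{param_name} ({param_type})"
            topic_index += 1
        else:
            # Non-indexed parameters are encoded, in order, in the data field.
            data_fields.append(f"{param_name} ({param_type})")

    layout["data"] = data_fields
    return layout


transfer = "Transfer(address indexed from, address indexed to, uint256 value)"
for slot, content in layout_event_parameters(transfer).items():
    print(slot, "->", content)
```

For the Transfer event, this places from and to in topic1 and topic2 and leaves value as the only entry in data, matching the breakdown above.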

Now, put on your Onchain Data Analyst hat, and let's learn how to decode topics and data from event logs manually.

Decoding Event Logs on Ethereum

Decoding event logs involves converting raw data from blockchain transactions into human-readable information. The goal of this process is to map each parameter in the topic and data fields to their corresponding data types.

In this walkthrough, we will decode the event log of an ERC20 transfer event from the PICKLE token.

The PICKLE Transfer event contains the sender, the recipient, and the amount of tokens transferred:

Transfer(address indexed from, address indexed to, uint256 value)

The event log fields are identified as follows:

Topic 0: The event signature

Topic 1: The from address

Topic 2: The to address

Data: The value amount

Now, let me show you how to decode each parameter manually.

Topic 0: The Event Signature

To find the event signature (Topic 0), first normalize the event declaration by removing spaces, parameter names, and keywords such as indexed. Then compute the Keccak-256 hash of the normalized string, using either a hashing function or an online hashing tool.
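
The normalization step can be sketched in a few lines of Python. This is a minimal illustration rather than a full Solidity parser: the helper name `normalize_event_signature` is hypothetical, it only handles flat parameter lists (no tuples), and it assumes types are already written in canonical form (for example `uint256` rather than `uint`).

```python
def normalize_event_signature(declaration: str) -> str:
    """Reduce an event declaration to the canonical name(type1,type2,...) form."""
    name, _, params = declaration.strip().partition("(")
    params = params.rstrip().rstrip(")")

    types = []
    for param in params.split(","):
        tokens = param.split()
        if tokens:
            # The first token of each parameter is its type; the "indexed"
            # keyword and the parameter name that follow are dropped.
            types.append(tokens[0])

    return f"{name.strip()}({','.join(types)})"


print(normalize_event_signature(
    "Transfer(address indexed from, address indexed to, uint256 value)"
))
```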

The normalized signature is:

Transfer(address,address,uint256)

Using this Keccak-256 tool, or the keccak function in Dune, input the normalized signature:

keccak(to_utf8('Transfer(address,address,uint256)'))

The output will be the following hash value:

ddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef
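
To reproduce this hash outside Dune, note that Python's built-in `hashlib.sha3_256` is not a drop-in substitute: it implements the finalized SHA-3 standard, which pads the message differently from the original Keccak-256 that Ethereum uses. The sketch below is a compact, unoptimized pure-Python Keccak-256 written only for illustration. The function names are my own, and in practice a maintained library would be the safer choice.

```python
MASK64 = (1 << 64) - 1


def _rol64(value: int, shift: int) -> int:
    """Rotate a 64-bit lane left by `shift` bits."""
    shift %= 64
    return ((value << shift) | (value >> (64 - shift))) & MASK64


def _keccak_f1600(lanes):
    """Apply the 24-round Keccak-f[1600] permutation to a 5x5 grid of lanes."""
    lfsr = 1
    for _ in range(24):
        # theta: mix each column's parity into its neighbouring columns
        c = [lanes[x][0] ^ lanes[x][1] ^ lanes[x][2] ^ lanes[x][3] ^ lanes[x][4]
             for x in range(5)]
        d = [c[(x + 4) % 5] ^ _rol64(c[(x + 1) % 5], 1) for x in range(5)]
        lanes = [[lanes[x][y] ^ d[x] for y in range(5)] for x in range(5)]
        # rho and pi: rotate each lane and move it to a new position
        x, y = 1, 0
        current = lanes[x][y]
        for t in range(24):
            x, y = y, (2 * x + 3 * y) % 5
            current, lanes[x][y] = lanes[x][y], _rol64(current, (t + 1) * (t + 2) // 2)
        # chi: non-linear step applied along each row
        for y in range(5):
            row = [lanes[x][y] for x in range(5)]
            for x in range(5):
                lanes[x][y] = row[x] ^ (~row[(x + 1) % 5] & row[(x + 2) % 5])
        # iota: XOR in the round constant, generated by an 8-bit LFSR
        for j in range(7):
            lfsr = ((lfsr << 1) ^ ((lfsr >> 7) * 0x71)) % 256
            if lfsr & 2:
                lanes[0][0] ^= 1 << ((1 << j) - 1)
    return lanes


def keccak256(message: bytes) -> bytes:
    """Original Keccak-256 (padding byte 0x01), as used for Ethereum topics."""
    rate = 136  # bytes absorbed per block (1088-bit rate, 512-bit capacity)
    padded = bytearray(message) + bytes(rate - len(message) % rate)
    padded[len(message)] ^= 0x01  # SHA3-256 would use 0x06 here
    padded[-1] ^= 0x80

    lanes = [[0] * 5 for _ in range(5)]
    for offset in range(0, len(padded), rate):
        block = padded[offset:offset + rate]
        for i in range(rate // 8):
            lanes[i % 5][i // 5] ^= int.from_bytes(block[8 * i:8 * i + 8], "little")
        lanes = _keccak_f1600(lanes)

    # Squeeze the first 32 bytes of the state.
    return b"".join(lanes[x][0].to_bytes(8, "little") for x in range(4))


print(keccak256(b"Transfer(address,address,uint256)").hex())
```

The key design point is the padding byte: changing `0x01` to `0x06` would turn this into SHA3-256 and produce a different hash that does not match any topic0 on chain.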

Finally, prefix the hash with 0x, and you will have the event signature:

topic0 = 0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef

Topic 1: The from Address

The data type for Topic 1 is an address. Therefore, our goal is to decode it from its current hex value into the standard address format.

A standard EVM address has 42 characters (the 0x prefix plus 40 hex digits), but a topic is always 32 bytes wide, so the address below is left-padded with 24 zeros:

0x00000000000000000000000075e89d5979e4f6fba9f97c104c2f0afb3f1dcb88

To decode this address, we apply the substring function to the 64-character hex string returned by to_hex (which carries no 0x prefix). Because substring takes a start position and a length, we start at position 25 and take the remaining 40 characters. We then prepend `0x` to convert the result into the standard Ethereum address format.

Using Dune SQL, the query for decoding Topic 1 will be:

'0x' || lower(substring(to_hex(topic1), 25, 40)) as decoded_topic1

The decoded address should return:

0x75e89d5979e4f6fba9f97c104c2f0afb3f1dcb88
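
The same slicing can be checked offline with a short Python sketch. The helper name `decode_address_topic` is hypothetical. Python strings are zero-indexed, so SQL position 25 corresponds to index 24 here.

```python
def decode_address_topic(topic_hex: str) -> str:
    """Turn a 32-byte topic (hex string) into a 0x-prefixed 20-byte address."""
    digits = topic_hex[2:] if topic_hex.startswith(("0x", "0X")) else topic_hex
    digits = digits.lower()
    if len(digits) != 64:
        raise ValueError("a topic must be exactly 32 bytes (64 hex characters)")
    if digits[:24] != "0" * 24:
        raise ValueError("expected 24 leading zeros for an address-typed topic")
    # Keep the last 40 hex characters, which are the 20 address bytes.
    return "0x" + digits[24:]


# Topic 1 from the walkthrough, rebuilt as 24 padding zeros plus the address.
topic1 = "0x" + "0" * 24 + "75e89d5979e4f6fba9f97c104c2f0afb3f1dcb88"
print(decode_address_topic(topic1))
```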

Topic 2: The to Address

Since both Topic 1 and Topic 2 are addresses, we can simply replicate the process explained above.

Using Dune SQL, the query for decoding Topic 2 will be:

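'0x' || lower(substring(to_hex(topic2), 25, 40)) as decoded_topic2

The decoded address should return:

0x87d9da48db6e1f925cb67d3b7d2a292846c24cf7

Data: The value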

The value parameter in the data field is an unsigned integer (uint256). To convert it from hexadecimal to an integer, we use Dune's bytearray functions: bytearray_substring extracts the first 32 bytes, and bytearray_to_uint256 converts them to a number:

bytearray_to_uint256(bytearray_substring(data, 1, 32)) AS amount

The output will be an integer representing the raw amount of tokens transferred from the sender (Topic 1) to the receiver (Topic 2).
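
For comparison, here is a minimal Python sketch of the same conversion. The helper name `decode_uint256` and the sample payload are hypothetical: the payload encodes 10**18 purely for illustration and is not the data from the PICKLE transaction.

```python
def decode_uint256(data_hex: str, word_index: int = 0) -> int:
    """Read the 32-byte word at `word_index` from the data field as a uint256."""
    digits = data_hex[2:] if data_hex.startswith(("0x", "0X")) else data_hex
    raw = bytes.fromhex(digits)
    # Python slices are zero-based, so word 0 covers the same bytes as
    # bytearray_substring(data, 1, 32) in Dune SQL.
    word = raw[32 * word_index : 32 * (word_index + 1)]
    if len(word) != 32:
        raise ValueError("data does not contain a full 32-byte word at that index")
    # ABI-encoded integers are big-endian.
    return int.from_bytes(word, "big")


# Hypothetical data payload: a single word encoding 10**18.
sample_data = "0x" + (10**18).to_bytes(32, "big").hex()
print(decode_uint256(sample_data))
```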

Our final query for decoding all topics and data in the PICKLE Transfer Event will be:

select

keccak(to_utf8('Transfer(address,address,uint256)')) as topic0

, '0x' || lower (substring(to_hex(topic1),25, 42)) as decoded_topic1

, '0x' || lower (substring(to_hex(topic2),25, 42)) as decoded_topic2

,bytearray_to_uint256(bytearray_substring(data, 1,32)) as amount

from ethereum.logs

where tx_hash = 0x2bb7c8283b782355875fa37d05e4bd962519ea294678a3dcf2fdffbbd0761bc5

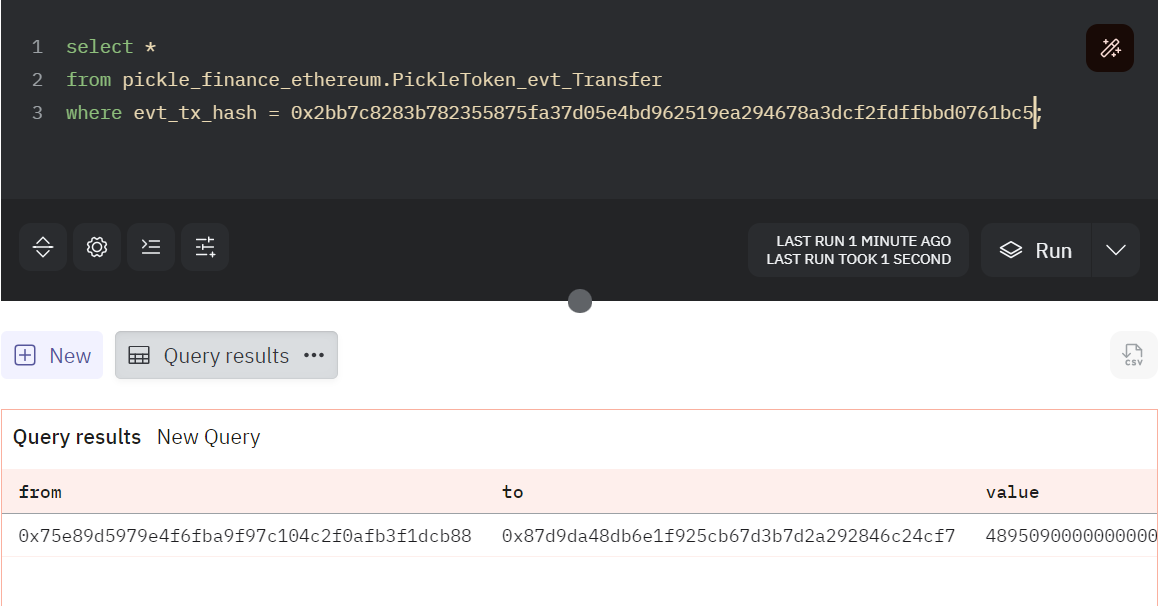

and contract_address = 0x429881672B9AE42b8EbA0E26cD9C73711b891Ca5Now, let’s verify the accuracy of our decoding by switching the view in the Event Logs Explorer from hex to decoded format. The output below matches the results of our manual decoding.

In addition, the PICKLE event logs table is already decoded in Dune, allowing us to query the pickle_finance_ethereum.PickleToken_evt_Transfer table to access the decoded event log.

Next Steps…

The ability to manually decode event logs is crucial for assessing data accuracy, completeness, and consistency before conducting on-chain analysis.

This walkthrough was designed to provide you with a foundational understanding of how the decoding process works for basic data types.

As you continue to query blockchain data, you'll encounter more complex data types like tuples, timestamps, and others. I highly recommend this video walkthrough by Sam-SQL Sunday, which is particularly helpful for learning how to decode complex data types.

On my end, I will keep experimenting with decoding event logs using contract ABIs. If you're interested, I can create guides to share my findings.

The final part of my series on “Working with Raw Data” will focus on decoding Traces. I’ll be sharing that guide in the coming days.

As always, I welcome your feedback on how I can improve and suggestions for future guides to help make your on-chain data career easier.

Reference Tools & Resources to improve your learning